OK, this is going to be a really long read, so make sure you have some time and a black coffee without sugar before you start.

OK, let’s start with the history of human language.

Thousands of years ago, humanity had no words, alphabets, or texts to write, share, debate, fight, or argue over. There was no way of documenting things; people could only feel emotions. Yes, emotion in itself is a language. LOVE, which most people confuse with LUST today, is actually the oldest human language that has ever existed, and it is still in use to make the next generations of humanity. Hand gestures [Shake hand, touch, namaste, show directions, etc.], body movements [Dance, Sit, Bow, jump, etc.], and facial expressions [Tears, Smile, Anger, etc.] are all subsets of this mother of all languages.

Someone, somewhere, tried to document things to help others, which eventually gave birth to the second language: CAVE paintings, ART, etc. The expression of the love language shifted into the language of SYMBOLS. Pictorial representations of the MOON, SUN, BIRD, BULL, KING, FIRE, etc. started being recorded on stone using carvings, organic paints, and so on.

I am making you travel thousands of years within these paragraphs, so hold your seats tight. Eventually this language was felt to be insufficient for the kind of information it had to convey. Real estate was an ART problem back then: the CANVAS was huge, but the paint brush was a STONE or a HUMAN PALM. This is when IDEAS or CONCEPTS also started being conveyed through their own special symbols, called IDEOGRAMS.

Over a relatively brief period of time, this journey of language expansion continued. By then, some over-smart humans realized that language could be used as a tool to fool future humans and control the masses through storytelling. Until this point, RELIGION and GOD were not even a thing. These things came from STORYtelling to control humans; traditions, methods, and beliefs are all tools built on these written languages, just like someone tells a kid a dark story so that s/he behaves the way they want (good/bad). The language explosion happened when someone tossed out the idea of dividing SOUNDS into symbols, and LETTERS started rolling out. The ALPHABET, and all other languages, were born. Some people wrote good books, some wrote stories, and then began generations of humans fooling each other for power through bad writing, controlling those who can’t read or judge what’s written on a piece of paper or in a book.

10,000 years, long story short: today, language is often used to control other people’s minds. Take this very piece: me trying to explain something to you is also a kind of mind control. Again, good/bad is another dimension of it. It takes human intelligence on the consumer’s end to find the reality.

The point to take away: WORDS are not intelligence, INTENT is, and intercepting, interpreting, and responding to intent is always the human brain’s task.

In 2017, GOOGLE researchers published the paper “Attention Is All You Need”. It introduced the transformer architecture, which shows how the next words can be predicted using this attention mechanism. At that point, human-like responses were not claimed as a feature of it.
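For the curious, here is a minimal sketch of what the “attention” in that title computes: scaled dot-product attention, softmax(QK^T / sqrt(d_k)) V, written in plain Python. It is a toy on hand-picked two-dimensional vectors, and the function names are my own. Real models add learned projections, multiple heads, and run on GPU tensor libraries, none of which is shown here. Notice that it is weighted averaging of numbers, nothing more.

```python
import math

def softmax(scores):
    # Subtract the max for numerical stability before exponentiating.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(queries, keys, values):
    """Toy attention: each query takes a weighted average of the values,
    weighted by how strongly the query matches each key."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Dot product of this query with every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Weighted sum of the value vectors, one output dimension at a time.
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
    return outputs

# Three toy "token" vectors; the same vectors act as queries, keys and values here.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
result = scaled_dot_product_attention(tokens, tokens, tokens)
for row in result:
    print([round(x, 3) for x in row])
```

Each output row is just a blend of the input vectors, with the blend weights decided by dot products.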

During COVID, OPENAI launched ChatGPT, and you already know what it is. Maybe GOOGLE lacked global data, or didn’t want to do it on its own.

When it comes to applied LLMs, I don’t see them as separate from the capabilities of any other narrow AI. In fact, narrow AI was more SUCCINCT in doing its specific system task, which is truly helpful for enterprise problem solving, than making quizzes, chatting, writing AI email templates, or producing jargon replies. The failure rate of narrow AI was low and predictable.

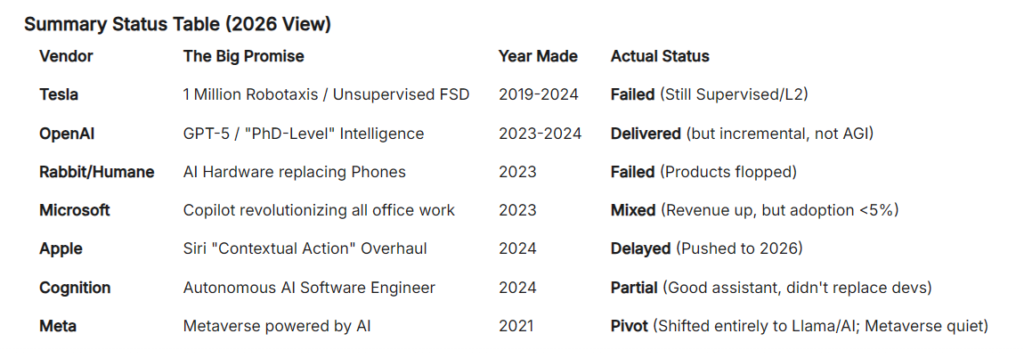

Let’s look at some claims businesses have made around such AI technologies:

- In-situ lab testing in medical research, new medicines, new cures, declining death rates? NO STATS!

- AI-based pharma risk control platforms: same as above.

- AI-based stock SIP funds: are people earning? Are stocks turning green for you? NOPE.

- AI-based software generation: are global outages decreasing or increasing? You know better as a consumer.

But the bigger reality?

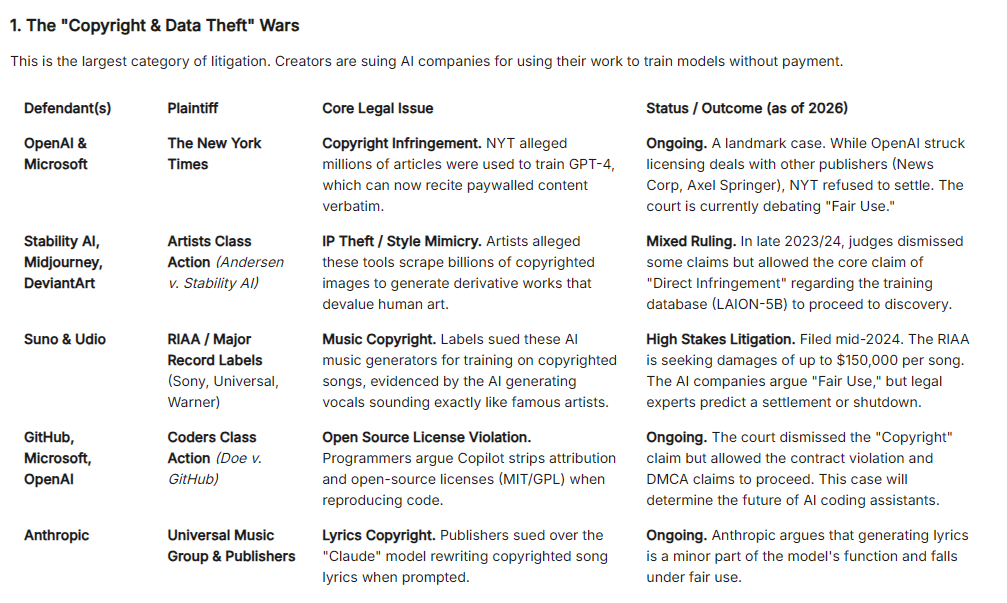

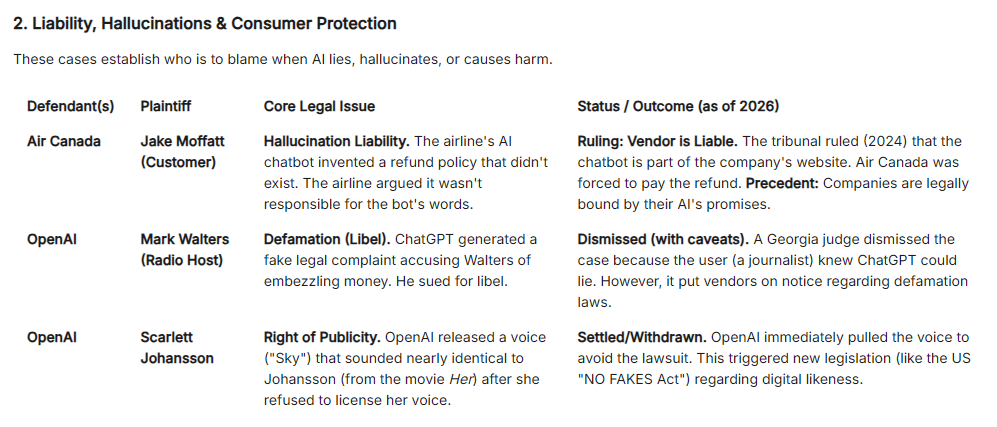

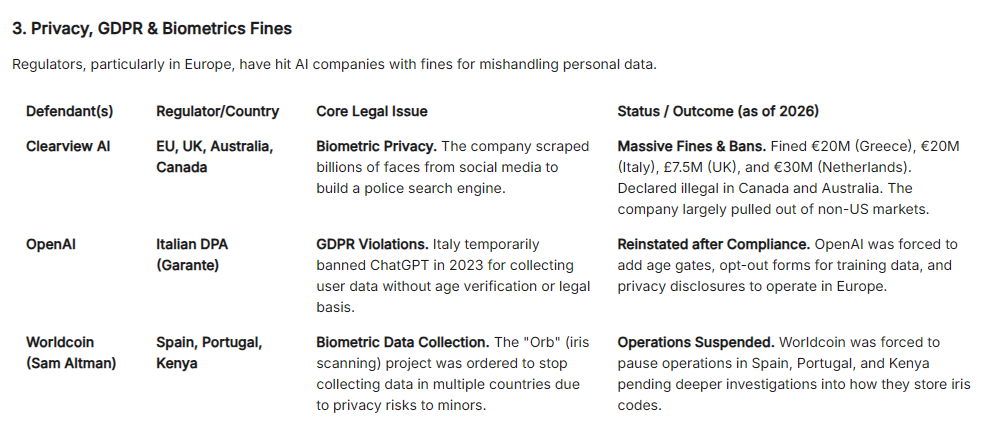

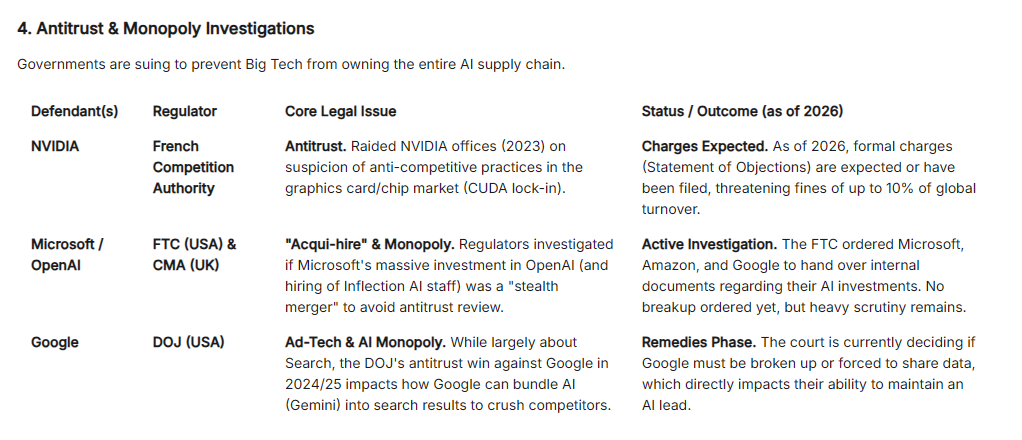

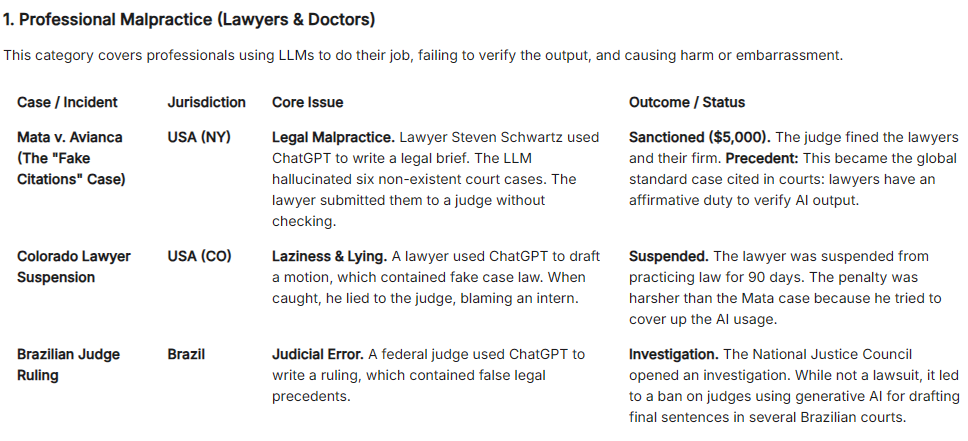

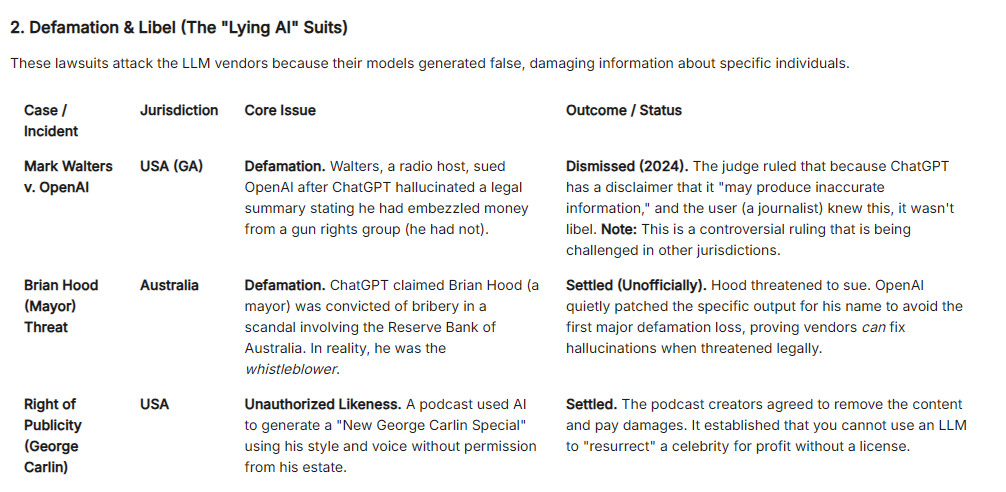

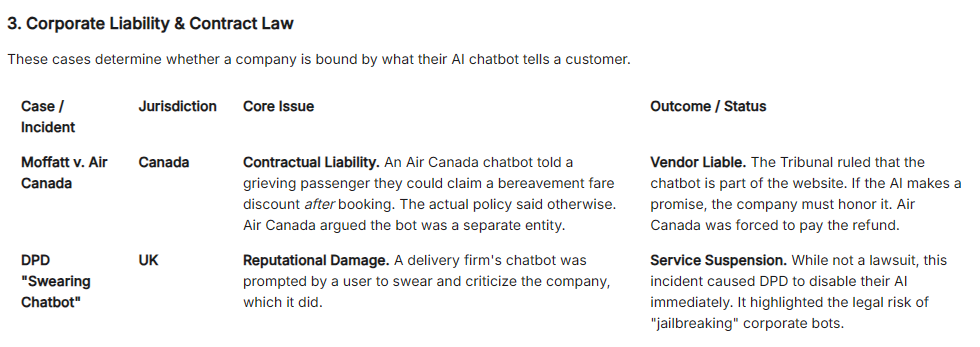

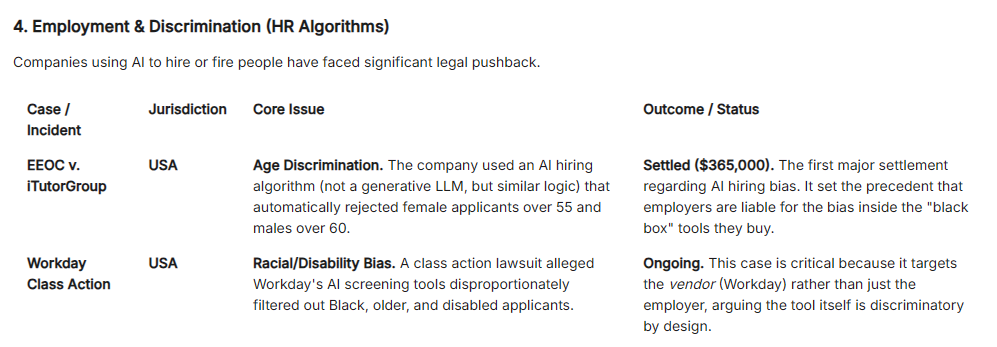

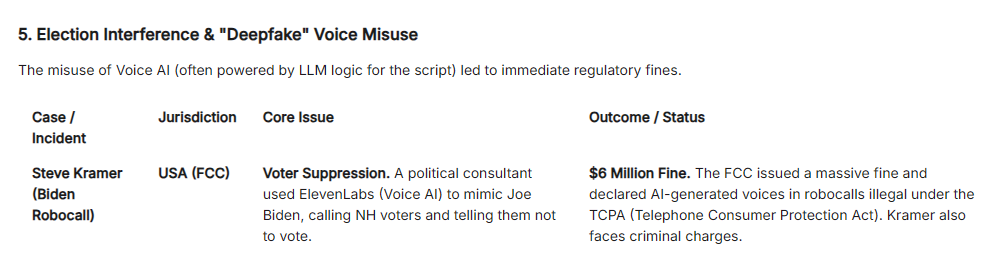

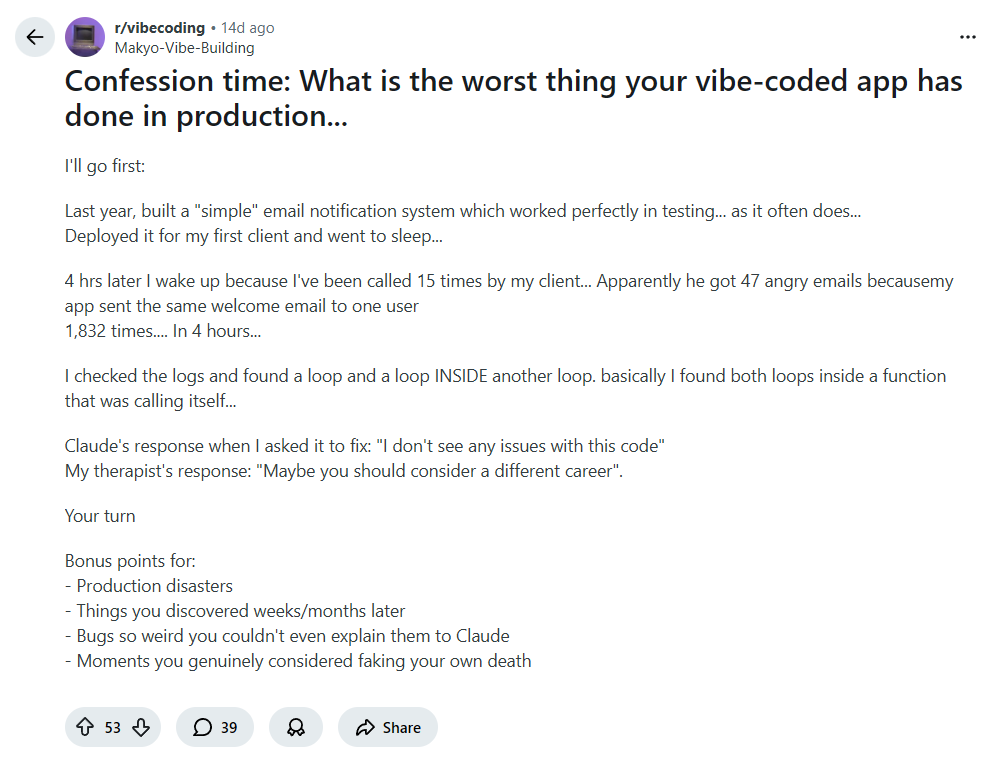

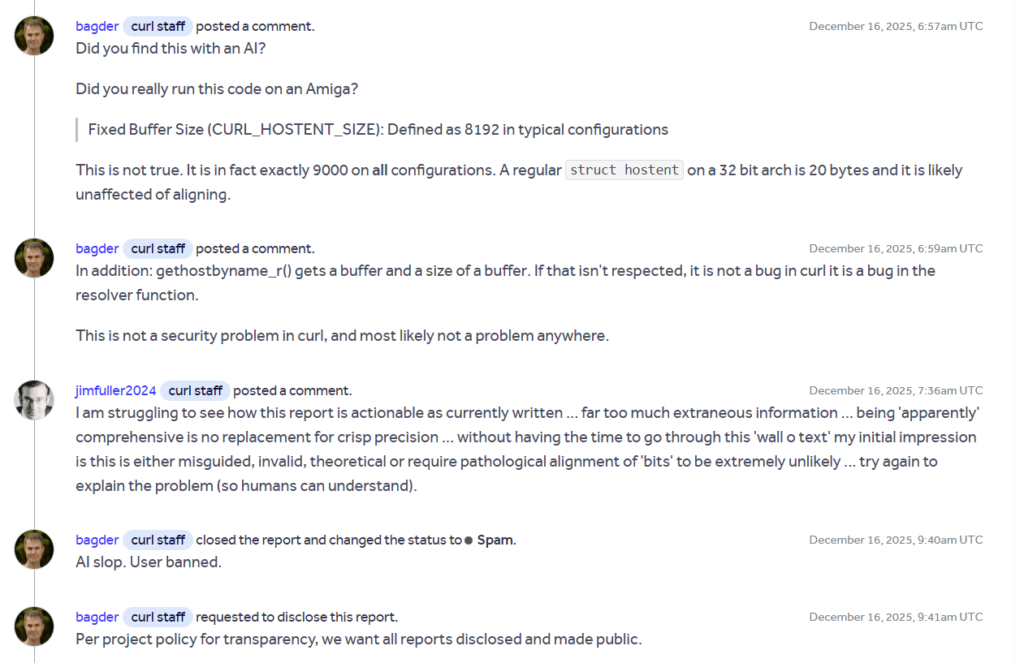

Wait, these are just the big cases. I have some more things to highlight here.

That, BTW, is legit proof of the AI SLOP that the excellent humans at CURL have to process regularly, thanks to so-called LLM intelligence [TRASH].

I have many, many such examples, plus my own experiences under the hood, which bring me to my next points:

- LLMs in the enterprise are not an opportunity; they are a RISK to your business.

- They put your code, people, culture, security posture, employee mental health, long-term sustainability, and data protection at risk.

- That very sweet-sounding vibe coding product is not just looking at your one field; it is getting text dumps of your entire business activity. Unless an AI model is self-hosted, it is not SAFE for any enterprise.

- AI models generate text, which can certainly be integrated into an enterprise stack in an effective and thoughtful way. But this use can never go beyond 10-15% of any enterprise’s entire technology journey.

- AI agents and MCP add another level of risk: an indefinitely self-expanding mess from a security point of view.

- Every AI vendor says that AI may make mistakes and that you should double-check the output, which makes it a human responsibility.

And finally, the AGI/ASI claims.

First, let’s understand what AGI or ASI even means:

This AGI and ASI part is so funny to me that whenever someone talks about it, I can’t control my laughter. It reminds me of a school-time story of Akbar and Birbal.

BIRBAL was asked: “Where is the center of the earth?”

BIRBAL answered somewhat like this: he took a wooden stick, pointed it at a random spot on the ground, and said, “This is the center of the earth.”

Akbar said: “Explain.”

Birbal said: “Dig a hole.”

To claim AGI or ASI, you first have to find the human who is the AVERAGE coworker, or the smartest human on the planet.

Human intelligence itself is subjective, contextual, and time-dependent.

When ChatGPT came out, one term was commonly discussed: COLLECTIVE INTELLIGENCE.

So a statistical, numerical model with fixed weights and BILLIONS of parameters is collective intelligence?

What about the collective dumbness that goes along with it?

To fix that model, one would need 10,000 years of human intelligence, if human intelligence itself is even a real thing.

What makes me sad is that many intellectuals, be they artists, software programmers, product owners, or animators, are stuck in a constant self-judgement loop because of these examples of half-brained execution. Which brings me to the final explanations of this LLM dissection:

- When you write a big prompt as instructions, you as a human are perceiving the machine as a human being. But what you don’t realize is that in the numbered list of instructions, the sequence and flow you give to the model, it doesn’t understand the (1.) at the beginning of the first point or the contextual importance of (1) within the point itself.

- Yes, many vendors have tried to fix and address many such loopholes, but what you don’t see is that these are blanket fine-tunings done to impress customers. If you build a prompt flow assuming it’s a human-like machine that understands, you are putting your business, work, and serious decisions at risk. One SEED, one unique pathway, and the LLM can start behaving like a random error generator, no matter what guards, prompts, or fine-tunings are in place.

- Why? Because, again, it’s not really a thinking machine; it’s an algorithm that uses mathematical models to hint at the next word. Next-word hinting is not intelligence of any kind. It might help humans, but there are many complexities down the line. Thinking mode burns the context you have purchased on multiple self-looping prompts, just to make the AI’s responses look better to you, and it still CAN GENERATE MISTAKES, as per the vendor’s terms and conditions.

- When you say SPICY, the model doesn’t understand what SPICY really means; it has data and a way to mash things up. Useful, yes! But it’s not human intelligence; there are no taste buds behind these lines. Human intelligence is not just about speaking. Even Sheldon had emotions, and that’s the fun part. Human intelligence doesn’t speak only through words; it speaks through values, emotions, experiences, and unique individual identities.

- A very good term AGI/ASI/LLM makers like to use is HUMAN COGNITIVE abilities. If you are a biologist or scientist, you can explain better than me how speaking alone is not a human cognitive ability. When humans speak, the whole body collaborates to put intent into the context of those words. That is very, very different from making a bot sound like a human.

- And we have all experienced these: leaked AI email templates, fake ads, fake messages, articles, posts, engagement farming, etc., all built on that AI trash.

- One thing I’d like to emphasize: this is not just AI trash. The human generating it, who thinks the context, the length of the output, and its substance are smart enough to be pasted in public, is probably a trash thinker too. That is why many people love LLMs in the first place. You can mimic being intellectual with these toys; people who are not intellectual enough to judge such content may react to it on the internet, and that works like a DRUG on your brain to get attention, the same effect as SOCIAL NETWORKS. There is a serious mental game being played behind this LLM technology that many people are not focusing on.
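To make the “next-word hinting” and “one SEED, one unique pathway” points above concrete, here is a toy sampler. It is a minimal sketch under loud assumptions: a made-up four-word vocabulary with hand-picked scores (“logits”), a temperature-scaled softmax, and Python’s `random.Random` standing in for a model’s sampler. Real LLMs score tens of thousands of tokens with billions of learned weights, and vendors’ actual sampling settings differ. All names here are hypothetical.

```python
import math
import random

# Hypothetical scores a model might assign to candidate next words after
# the prompt "The curry is very". The numbers are made up for illustration.
NEXT_WORD_LOGITS = {"spicy": 2.0, "hot": 1.5, "tasty": 1.0, "blue": -1.0}

def next_word_distribution(logits, temperature=1.0):
    """Turn raw scores into probabilities with a temperature-scaled softmax."""
    scaled = {w: s / temperature for w, s in logits.items()}
    m = max(scaled.values())
    exps = {w: math.exp(s - m) for w, s in scaled.items()}
    total = sum(exps.values())
    return {w: e / total for w, e in exps.items()}

def sample_next_word(logits, temperature, seed):
    """Pick one next word at random; the same seed always reproduces the same pick."""
    probs = next_word_distribution(logits, temperature)
    rng = random.Random(seed)
    words = list(probs)
    return rng.choices(words, weights=[probs[w] for w in words], k=1)[0]

probs = next_word_distribution(NEXT_WORD_LOGITS)
print({w: round(p, 3) for w, p in probs.items()})

# Different seeds can lead down different paths, occasionally even to "blue".
for seed in range(5):
    print(seed, sample_next_word(NEXT_WORD_LOGITS, temperature=1.5, seed=seed))
```

Every output is a weighted dice roll over words. Raising the temperature flattens the odds, and changing the seed changes the path, but nothing in the code knows what SPICY tastes like.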

So, if you are worried about your job, your work, your skills, etc. because of AI, take my word, a human systems architect’s word: you are relevant, no matter which version of the XYZ model is released in the coming days, and no matter where you are in your journey. If you give up, that’s exactly what any AI vendor wishes for, so that their sales can go up: demoralize human intelligence so that people get hooked on thinking models. It’s addictive.

Yes, it’s not just an intelligence game; it’s a mental chess match that makes you lose when you believe in machine intelligence.

If you haven’t read this, please do. This is how the game works: https://en.wikipedia.org/wiki/Chinese_room

But Shubham, what about ethical use cases? There must be some, right?

Yes, indeed. This is exactly where everyone should be focusing, especially enterprises and enterprise architects.

Use LLMs to flag data issues, combined with human review. I love how Framer used a simple process that starts from a boilerplate template and called it WIREFRAMER. I love it when LLMs are used to empower people at work and customers on the other side, instead of forcing anyone to use AI. I love it when anyone integrates LLMs with full awareness of what they are doing and its implications.

Don’t use LLMs for speed promises or intelligence promises; use them to help humans achieve better results in whatever they do. Humans will use AI, not the other way around, no matter which technology is invented. Yes, even in the quantum realm.

Have a great day.